Welcome to the Mouse vs AI: Robust Visual Foraging Competition @ NeurIPS 2025 Competition Track.

Announcements:

- Join us on Zoom or in Upper Level Ballroom 6CF for the NeurIPS 2025 Workshop (Sun, Dec 7, 11am-2pm PST)

- Congratulations to our inaugural winners: Team HCMUS_TheFangs (Ho Chi Minh City University of Science)!

- Learn more about our competitions by reading our whitepaper

Mice navigate robustly in a range of conditions, such as fog, possibly more robustly than self-driving cars, which can struggle with out-of-distribution conditions. Build an AI agent that can outcompete a mouse!

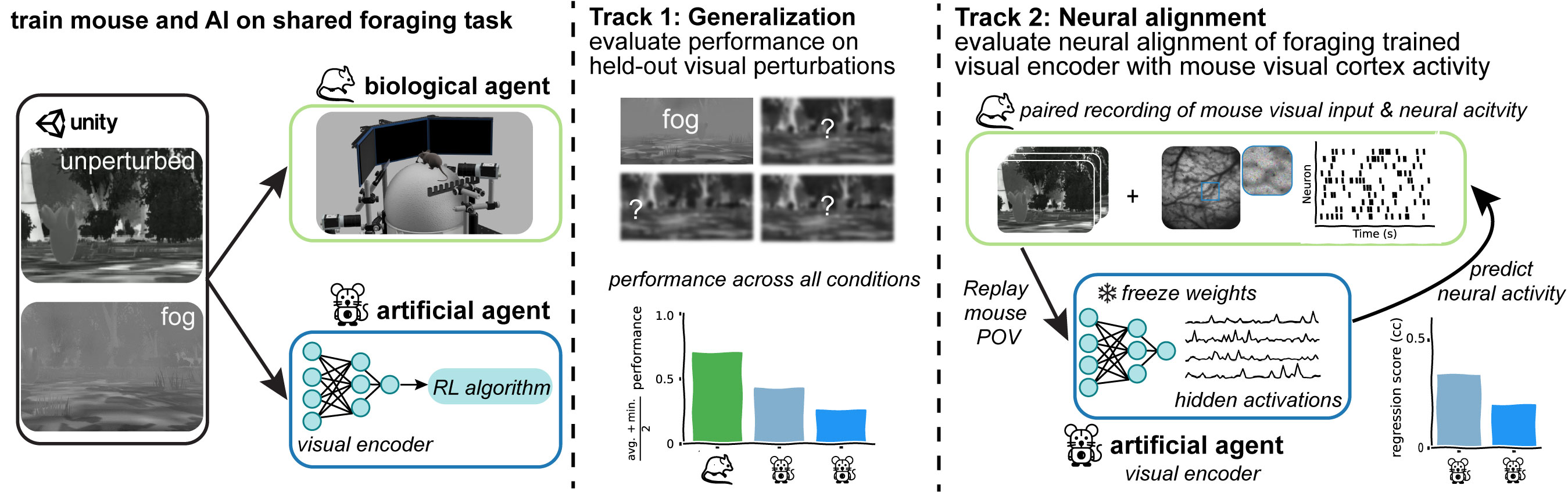

In this competition, we have generated a unique dataset that includes hours of mice performing a visual navigation task in a detailed virtual reality (VR) environment built in Unity, under a range of perturbations to the visual stimuli (fog, etc.).

- Track 1 — Visual Robustness: Build an AI agent that interfaces with the same VR game and try to outperform a mouse when faced with various visual perturbations.

- Track 2 — Neural Alignment: We used two-photon imaging to record cortical activity while mice perform the task. Predict those neural responses from your agent’s encoder activations—does it extract the same information?

Competition Overview

Robust visual perception under real-world conditions remains a major bottleneck for AI agents: a modest shift in image statistics can collapse performance that was near-perfect during training. Biological vision, by contrast, is remarkably resilient. Mice trained for only a few hours in a clear scene continue to solve the same visually guided foraging task when degradations are introduced, showing only a modest drop in success.

The Mouse vs AI: Robust Visual Foraging Challenge turns that gap into a quantitative benchmark. Participants train artificial agents in the Unity environment used for the mouse experiments, with access to two conditions (clean and fog). In each 5-second trial, the agent receives visual information about the environment as 86 × 155-pixel grayscale images and must reach a randomly placed, visually cued target. During mouse training, the target started near the animal, and the start distance was increased whenever performance exceeded 70% success; the provided NormalTrain, FogTrain and RandomTrain builds of the game implement the same curriculum for agents. A separate build, RandomTest, always places the target at the maximum distance, mirroring the final evaluation pipeline with the two provided conditions. The trained agent (visual encoder plus policy) is the single submission used for both tracks.
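The success-gated curriculum described above can be sketched as follows. This is a minimal illustration, not the logic inside the NormalTrain, FogTrain or RandomTrain builds: the class name `DistanceCurriculum`, the distance units, the step size and the length of the averaging window are all hypothetical placeholders; only the 70% threshold comes from the description.

```python
from collections import deque


class DistanceCurriculum:
    """Hypothetical success-gated curriculum: move the target farther away
    once the recent success rate exceeds a threshold."""

    def __init__(self, min_distance=1.0, max_distance=5.0, step=0.5,
                 threshold=0.70, window=20):
        # Distances, step size and window length are illustrative placeholders;
        # only the 70% threshold comes from the competition description.
        self.distance = min_distance
        self.max_distance = max_distance
        self.step = step
        self.threshold = threshold
        self.window = window
        self.recent = deque(maxlen=window)

    def record_trial(self, success: bool) -> float:
        """Log one trial outcome and return the start distance for the next trial."""
        self.recent.append(1.0 if success else 0.0)
        if len(self.recent) == self.window:
            rate = sum(self.recent) / self.window
            if rate > self.threshold and self.distance < self.max_distance:
                self.distance = min(self.distance + self.step, self.max_distance)
                # Start a fresh measurement window at the new, harder distance.
                self.recent.clear()
        return self.distance


curriculum = DistanceCurriculum(window=4)
for outcome in [True, True, True, True, False, True]:
    next_distance = curriculum.record_trial(outcome)
print(next_distance)  # 1.5
```

Clearing the window after each promotion means the agent must re-demonstrate better than 70% success at the new distance before it is pushed farther; a success rate of exactly 70% does not advance it, matching "exceeded".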

Track 1 — Visual Robustness

Each submitted agent is evaluated under the two provided conditions and three held-out perturbations never seen during training. Performance is summarised by Average Success Rate (ASR) and Minimum Success Rate (MSR) across all conditions:

$$ \displaystyle \text{Score}_{\text{1}} = \frac{\text{ASR} + \text{MSR}}{2} $$
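A minimal sketch of the Track 1 formula, assuming per-condition success rates are already available as fractions in [0, 1]. The function name and the held-out condition labels are hypothetical, and the numbers are invented for illustration; they are not competition results.

```python
def track1_score(success_rates: dict[str, float]) -> float:
    """Score_1 = (ASR + MSR) / 2 over per-condition success rates in [0, 1]."""
    if not success_rates:
        raise ValueError("need at least one condition")
    rates = list(success_rates.values())
    asr = sum(rates) / len(rates)  # Average Success Rate
    msr = min(rates)               # Minimum Success Rate (worst condition)
    return (asr + msr) / 2


# Made-up success rates for illustration only; the held-out names are placeholders.
rates = {"clean": 1.0, "fog": 0.75, "heldout_a": 0.5, "heldout_b": 0.5, "heldout_c": 0.25}
print(round(track1_score(rates), 3))  # 0.425
```

Because MSR carries the same weight as ASR, an agent at 0.6 in every condition scores 0.6, while the uneven agent above scores 0.425 despite having the same average of 0.6.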

Track 2 — Neural Alignment

To test whether robust behaviour coincides with mouse-like visual representations, we replay the mouse's video through each frozen visual encoder, extract hidden activations, and fit a linear regression that maps those activations to simultaneously recorded spiking activity from mouse V1 and higher visual areas. Unlike previous alignment challenges, where networks are optimised directly for neural activity prediction, we quantify the emergent alignment of encoders trained solely for foraging. To avoid an advantage for larger models, each layer's hidden activations are first reduced to 50 dimensions using PCA. During evaluation we then fit the linear regression separately for each layer and score it by the mean Pearson correlation between predicted and recorded activity across all N neurons, where $\rho_i$ is the correlation for neuron $i$:

$$ \text{Score}_{\text{2}}= \frac{1}{N}\sum_{i=1}^{N}\rho_i $$

The layer with the highest mean correlation determines the agent's final Track 2 score.
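The Track 2 procedure can be sketched with NumPy. This is an illustration under stated assumptions, not the organisers' evaluation code: it assumes activations and neural responses are already aligned frame by frame as arrays of shape (frames, features) and (frames, neurons), fits PCA and an unregularised least-squares regression on the first 80% of frames, and computes Pearson correlations on the remaining 20%. The split, the absence of regularisation and all function names are hypothetical choices.

```python
import numpy as np


def pca_reduce(train, test, n_components=50):
    """Project train/test activations onto the top principal axes of the training set."""
    mean = train.mean(axis=0)
    # Rows of vt are the principal axes of the centred training data.
    _, _, vt = np.linalg.svd(train - mean, full_matrices=False)
    basis = vt[: min(n_components, vt.shape[0])].T
    return (train - mean) @ basis, (test - mean) @ basis


def with_bias(x):
    """Append a constant column so the regression learns an intercept."""
    return np.hstack([x, np.ones((x.shape[0], 1))])


def fit_predict(x_train, y_train, x_test):
    """Ordinary least squares from features to all neurons at once."""
    weights, *_ = np.linalg.lstsq(with_bias(x_train), y_train, rcond=None)
    return with_bias(x_test) @ weights


def mean_pearson(y_true, y_pred):
    """Mean over neurons (columns) of the Pearson correlation; constant columns are skipped."""
    yt = y_true - y_true.mean(axis=0)
    yp = y_pred - y_pred.mean(axis=0)
    denom = np.sqrt((yt ** 2).sum(axis=0) * (yp ** 2).sum(axis=0))
    rho = (yt * yp).sum(axis=0) / np.where(denom > 0, denom, np.nan)
    return float(np.nanmean(rho))


def track2_score(layer_activations, neural, train_frac=0.8):
    """Score every layer and return (best score, best layer name, all layer scores)."""
    n_frames = neural.shape[0]
    split = int(train_frac * n_frames)  # hypothetical chronological train/test split
    scores = {}
    for name, acts in layer_activations.items():
        flat = acts.reshape(n_frames, -1)  # e.g. (frames, C, H, W) -> (frames, C*H*W)
        x_train, x_test = pca_reduce(flat[:split], flat[split:])
        y_pred = fit_predict(x_train, neural[:split], x_test)
        scores[name] = mean_pearson(neural[split:], y_pred)
    best = max(scores, key=scores.get)
    return scores[best], best, scores


# Synthetic check: "conv1" is driven by the same latent signal as the neurons, "random" is not.
rng = np.random.default_rng(0)
n_frames, n_neurons = 400, 30
latent = rng.normal(size=(n_frames, 10))
layers = {
    "conv1": latent @ rng.normal(size=(10, 128)) + 0.01 * rng.normal(size=(n_frames, 128)),
    "random": rng.normal(size=(n_frames, 128)),
}
neural = latent @ rng.normal(size=(10, n_neurons)) + 0.1 * rng.normal(size=(n_frames, n_neurons))
best_score, best_layer, per_layer = track2_score(layers, neural)
print(best_layer)  # conv1
```

Fitting PCA on the training frames only keeps the held-out frames out of every fitted quantity, and the fixed 50-component bottleneck means a wide layer cannot win simply by offering the regression more free parameters.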

The overarching goals are therefore (i) to identify architectural and training principles that support visual robustness, and (ii) to test whether those principles also yield mouse-like internal representations. By grounding evaluation in both behaviour and cortical activity, the challenge unifies reinforcement learning, robust computer vision, and systems neuroscience within a single task.

Download & Quick Start

Choose the build for your platform:

| Platform | Download Link |
|---|---|
| Windows (GUI) | Windows v1.0 |
| macOS (GUI) | macOS v1.0 |
| Linux (GUI) | Linux v1.0 |

- Download the environment

- Install dependencies

- Train your first agent

- Submit your model: package your trained models and follow the Submission Guide.

Timeline

| Date | Milestone |
|---|---|
| July 1, 2025 | Starter kit released |
| July 15, 2025 | Competition officially begins |
| Nov 15, 2025 | Final submission deadline |
| Nov 22, 2025 | Evaluation + winners announced |
| Dec 2025 | Results at NeurIPS 2025 |

Communication

For questions, updates, and discussion, please join the Discord.

You can also reach us via email at robustforaging@gmail.com